The AtmosMaker Plugin

Posted on March 21, 2021

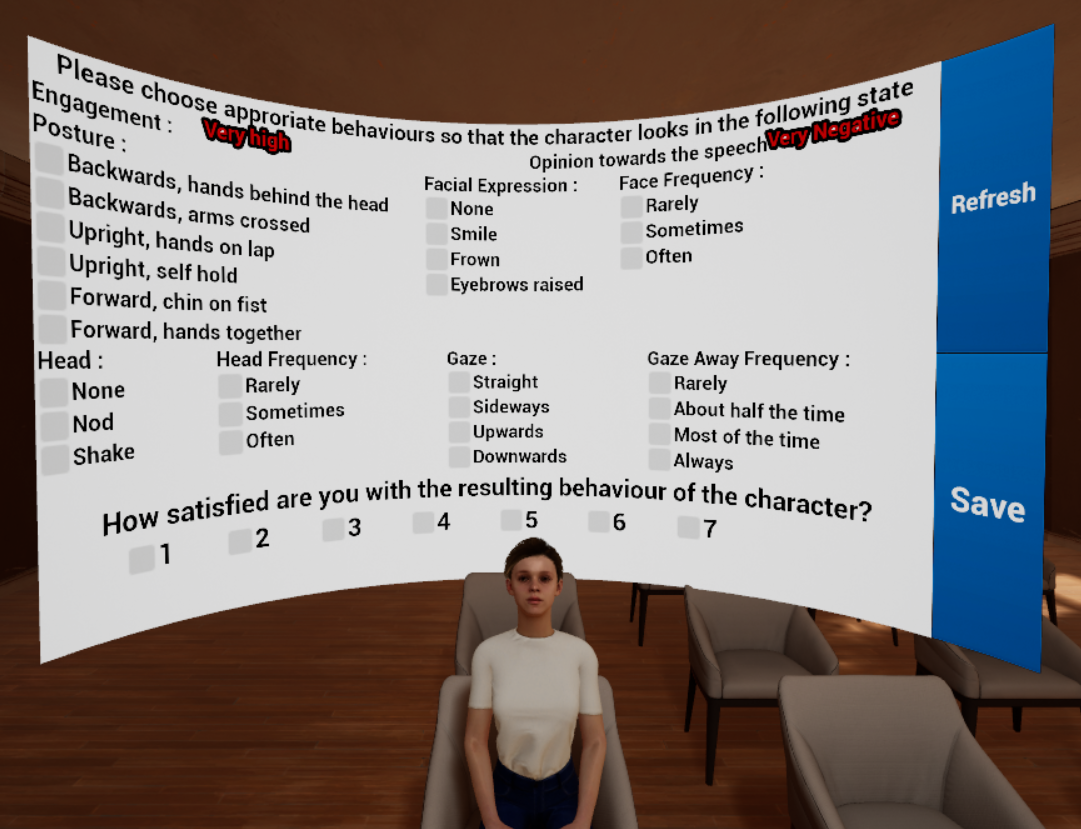

The AtmosMaker Plugin is the system developed within the Virtual Audience project. The project aims to provide a virtual audience simulator in virtual reality that lets you easily build and experience a wide variety of audience attitudes with small or large groups of virtual agents. The system implements a virtual audience behaviour model developed from the existing literature. So far, the system allows you to design and control your audiences, but it does not include animations or 3D characters.

Although the system was originally made for teacher training, we are now working with therapists to help people who stutter and others with social disabilities. If you want to know more about our projects, check out the HCI-Group page of the project, or this page for the latest features.

The Virtual Audience Team: