Contributions

GitHub source code: HERE

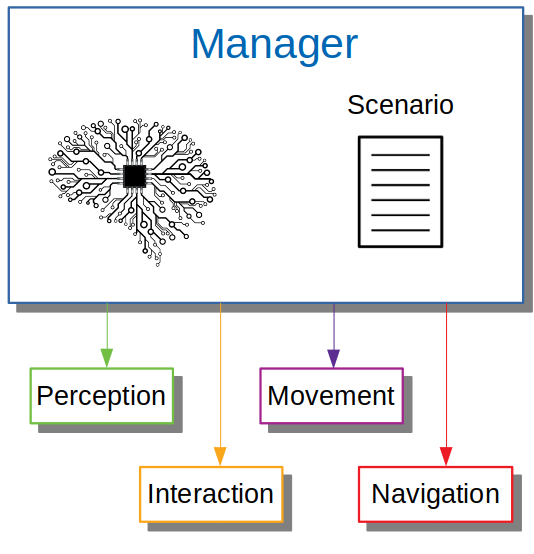

I. Overview of Our Architecture

Our architecture is built on five ROS modules: Reasoning, Perception, Navigation, Movement and Interaction.

II. Main Contributions

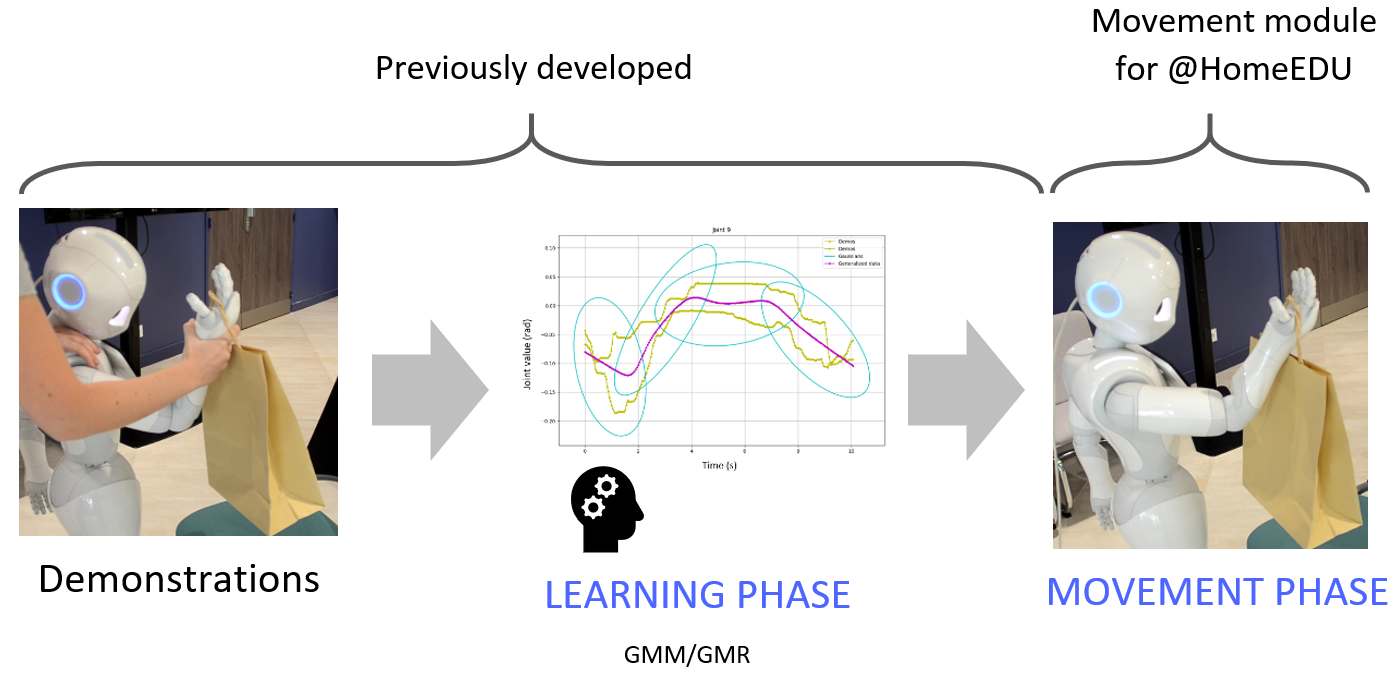

1. Movement Learning From Demonstrations

After developing a generic architecture that reproduces a movement, we need to learn a movement from multiple demonstrations. The chosen model combines a GMM (Gaussian Mixture Model) with GMR (Gaussian Mixture Regression). The GMM generalizes the demonstrated movement as a mixture of Gaussians, and GMR then regenerates the movement from the GMM output. Each demonstration and each generated movement is saved in its own file. The user can thus record demonstrations of a movement under a name of their choice and then start the learning. Because the learning is done beforehand, the learned movement runs in real time when the user starts it. The Pepper and Nao robots were tested in simulation using SoftBank Robotics' QiBullet simulator.
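As a rough illustration of the regression step, the sketch below applies GMR to a GMM that is assumed to be already fitted over (time, joint angle) pairs. The component values, the dictionary keys and the function names are hypothetical and are not taken from our code base; it only shows how a joint angle can be regenerated for a given time.

```python
import math


def gaussian_pdf(t, mean, var):
    """Density of a 1-D Gaussian evaluated at t."""
    return math.exp(-0.5 * (t - mean) ** 2 / var) / math.sqrt(2 * math.pi * var)


def gmr(t, components):
    """Expected joint angle at time t, conditioned on a fitted GMM.

    Each component is a dict (hypothetical layout) holding the mixture
    weight, the mean over time and angle, the time variance and the
    time/angle covariance.
    """
    # Responsibility of each Gaussian for the queried time.
    resp = [c["weight"] * gaussian_pdf(t, c["mean_t"], c["var_t"]) for c in components]
    total = sum(resp)
    if total == 0.0:
        raise ValueError("query time is too far from every component")
    # Blend the per-component conditional means by responsibility.
    return sum(
        (r / total) * (c["mean_x"] + c["cov_tx"] / c["var_t"] * (t - c["mean_t"]))
        for r, c in zip(resp, components)
    )


# Illustrative parameters only; a real GMM would be fitted on demonstrations.
example_gmm = [
    {"weight": 0.5, "mean_t": 0.25, "mean_x": 0.0, "var_t": 0.01, "cov_tx": 0.02},
    {"weight": 0.5, "mean_t": 0.75, "mean_x": 1.0, "var_t": 0.01, "cov_tx": 0.02},
]
trajectory = [gmr(i / 10, example_gmm) for i in range(11)]
```

Since the GMM is fitted offline, evaluating `gmr` at playback time only costs a few arithmetic operations per sample, which is consistent with running the learned movement in real time.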

More information about this project

Example video

2. Human-Robot Interaction

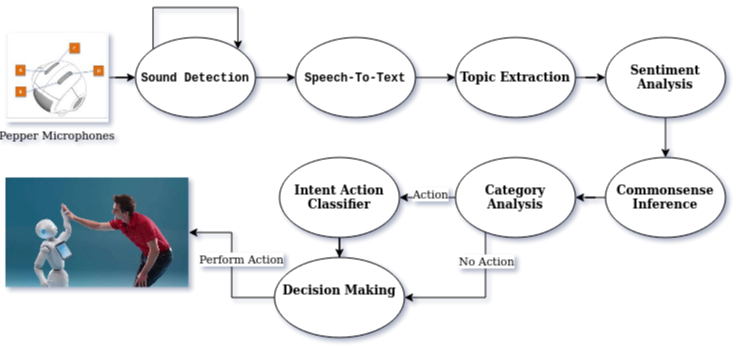

2.1. Dialog Pipeline

Another part of our work focuses on Human-Robot Interaction (HRI), and especially on dialog. We provide a solution that handles Speech Detection, Speech Recognition and Natural Language Processing (NLP) in order to answer human requests. The pipeline detects the user's voice by analysing how the ambient sound level evolves. Speech Recognition is powered by the Google Cloud Speech-to-Text API, and NLP relies on learning approaches such as Mbot combined with rule-based APIs (e.g. spaCy). In addition, we use a Dialog Act classifier to adapt the system response to the type of dialog. For example, if a sentence is classified as an Action-Command, the system runs Mbot for intent analysis; otherwise the classic rule-based model is used. Using this classifier as a pre-processing unit saves time and resources and prevents intent-classifier mistakes. We are also implementing a Sentiment Analysis module that classifies the user's utterance as "negative", "neutral" or "positive", which allows us to adapt Pepper's response (using a basic rule-based system). Finally, we use the COMeT framework for commonsense inference to retrieve the user's intents and needs. With this information we can suggest next commands to the user.
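The speech detection step can be pictured with the minimal sketch below, which flags an audio frame as speech when its level rises well above a running estimate of the ambient level. The threshold ratio, the smoothing factor, the floor value and the function names are assumptions made for illustration, not the values or code used on the robot.

```python
import math


def rms(frame):
    """Root-mean-square level of one non-empty frame of audio samples."""
    return math.sqrt(sum(s * s for s in frame) / len(frame))


def detect_speech(frames, ratio=3.0, smoothing=0.9, floor=1e-4):
    """Return one boolean per frame: True when the frame looks like speech.

    The ambient level is tracked with an exponential moving average that is
    only updated on frames classified as non-speech.
    """
    ambient = None
    flags = []
    for frame in frames:
        level = rms(frame)
        if ambient is None:
            # The first frame is assumed to contain background noise only.
            ambient = max(level, floor)
            flags.append(False)
            continue
        is_speech = level > ratio * ambient
        flags.append(is_speech)
        if not is_speech:
            ambient = max(smoothing * ambient + (1 - smoothing) * level, floor)
    return flags


quiet = [0.01, -0.01, 0.01, -0.01]
loud = [0.5, -0.5, 0.5, -0.5]
flags = detect_speech([quiet, quiet, quiet, loud, loud, quiet])
```

In a full pipeline, the frames flagged as speech would be buffered and forwarded to the speech recognition service once the level falls back to ambient.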

2.2. Speaker Recognition

We provide a solution that learns to recognize a speaker's voice regardless of the language spoken. The proposed model builds on SincNet, whose only learnable parameters for each filter are the low and high cutoff frequencies. This reduces the number of parameters learned per filter and makes that number independent of the range. In addition, we combined SincNet with a Siamese network, together with an algorithm for training Siamese neural networks for speaker identification.
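To show why two cutoff frequencies are enough to define a filter, the sketch below builds a windowed sinc band-pass kernel as the difference of two low-pass sinc filters, which is the parameterization SincNet is based on. The sample rate, kernel length, cutoff values and function names are illustrative assumptions; in SincNet the two cutoffs are learned by gradient descent, which this sketch does not do.

```python
import math


def sinc(x):
    """Unnormalized sinc: sin(x) / x, with sinc(0) = 1."""
    return 1.0 if x == 0 else math.sin(x) / x


def sinc_bandpass(low_hz, high_hz, sample_rate, length):
    """Band-pass FIR kernel fully determined by its two cutoff frequencies."""
    if length % 2 == 0:
        raise ValueError("length must be odd so the kernel is symmetric")
    f1 = low_hz / sample_rate   # normalized low cutoff
    f2 = high_hz / sample_rate  # normalized high cutoff
    half = length // 2
    kernel = []
    for i in range(length):
        n = i - half
        # Difference of two ideal low-pass filters gives a band-pass filter.
        ideal = 2 * f2 * sinc(2 * math.pi * f2 * n) - 2 * f1 * sinc(2 * math.pi * f1 * n)
        # A Hamming window smooths the truncation of the ideal response.
        window = 0.54 - 0.46 * math.cos(2 * math.pi * i / (length - 1))
        kernel.append(ideal * window)
    return kernel


kernel = sinc_bandpass(low_hz=300.0, high_hz=3400.0, sample_rate=16000.0, length=101)
```

Whatever the kernel length, the filter is described by the same two numbers, whereas a standard convolutional filter would need one learned weight per tap.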

III. Others

1. Reasoning

The robot reasoning module (the manager) is a high-level structure. Module outputs are stored as object instances and remain available as input information later. The manager executes tasks in the required order, scheduling Pepper's behavior, and also handles task priority. The architecture was designed to make adding new behaviors easy: a new behavior can be implemented without editing any external module. To avoid execution lags, orders are canceled if they take too long to execute.
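The scheduling idea can be sketched as a priority queue whose tasks are canceled once they exceed a time budget. The class below is a hypothetical, simplified model rather than our manager: it assumes that a lower number means a higher priority, that a task is a list of steps, and that the deadline is checked between steps. It also takes an injectable clock so the example can run without waiting.

```python
import heapq
import itertools


class TaskManager:
    """Runs tasks by priority and cancels those exceeding their timeout."""

    def __init__(self, clock):
        self._clock = clock
        self._queue = []
        self._order = itertools.count()  # tie-breaker keeps submission order

    def submit(self, name, steps, priority, timeout):
        heapq.heappush(self._queue, (priority, next(self._order), name, steps, timeout))

    def run(self):
        log = []
        while self._queue:
            _, _, name, steps, timeout = heapq.heappop(self._queue)
            deadline = self._clock() + timeout
            status = "done"
            for step in steps:
                # The deadline is checked before each step (cooperative cancel).
                if self._clock() > deadline:
                    status = "canceled"
                    break
                step()
            log.append((name, status))
        return log


# Simulated clock: each step advances time instead of sleeping.
now = [0.0]


def tick(seconds):
    def step():
        now[0] += seconds
    return step


manager = TaskManager(clock=lambda: now[0])
manager.submit("greet", [tick(1.0)], priority=2, timeout=5.0)
manager.submit("navigate", [tick(4.0), tick(4.0), tick(4.0)], priority=1, timeout=5.0)
log = manager.run()
```

Here `navigate` runs first because of its priority, is canceled before its third step, and `greet` then completes normally.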

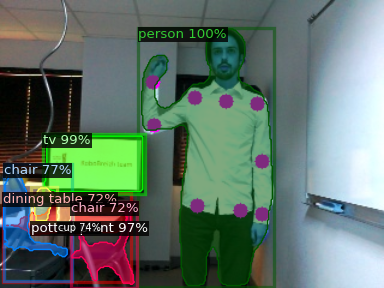

2. Perception

Mask R-CNN was chosen over other state-of-the-art object detection algorithms for its ability to detect objects with pixel-level precision. Compared to other solutions, this minimizes the noise added by the background and reduces the risk of localizing the object inaccurately. This level of precision is required when measuring the distance between Pepper and an object. Our module can also infer the current state of objects and people. For instance, from the positions of the chairs and people in an image and how they overlap, it is possible to determine whether the chairs are available or taken. Additionally, OpenPose is used to extract the positions of all the hands in an image and thus detect whether someone is waving. We also use Convolutional Neural Networks (CNNs) to estimate the age and gender of people using face recognition.
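The chair availability check can be illustrated with the simplified sketch below. It assumes axis-aligned bounding boxes given as (x_min, y_min, x_max, y_max) instead of the pixel-level masks produced by Mask R-CNN, and the 30% overlap threshold and function names are arbitrary choices made for the example.

```python
def intersection_area(a, b):
    """Area shared by two boxes given as (x_min, y_min, x_max, y_max)."""
    width = min(a[2], b[2]) - max(a[0], b[0])
    height = min(a[3], b[3]) - max(a[1], b[1])
    return max(0, width) * max(0, height)


def chair_status(chairs, persons, min_overlap=0.3):
    """Label each chair 'taken' when a person covers enough of its box."""
    statuses = []
    for chair in chairs:
        chair_area = (chair[2] - chair[0]) * (chair[3] - chair[1])
        taken = any(
            intersection_area(chair, person) / chair_area >= min_overlap
            for person in persons
        )
        statuses.append("taken" if taken else "available")
    return statuses


chairs = [(0, 0, 10, 10), (20, 0, 30, 10)]
persons = [(2, -10, 8, 6)]
statuses = chair_status(chairs, persons)
```

With instance masks, the same ratio could be computed on overlapping pixels instead of box areas, which would be less sensitive to people standing behind a chair.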

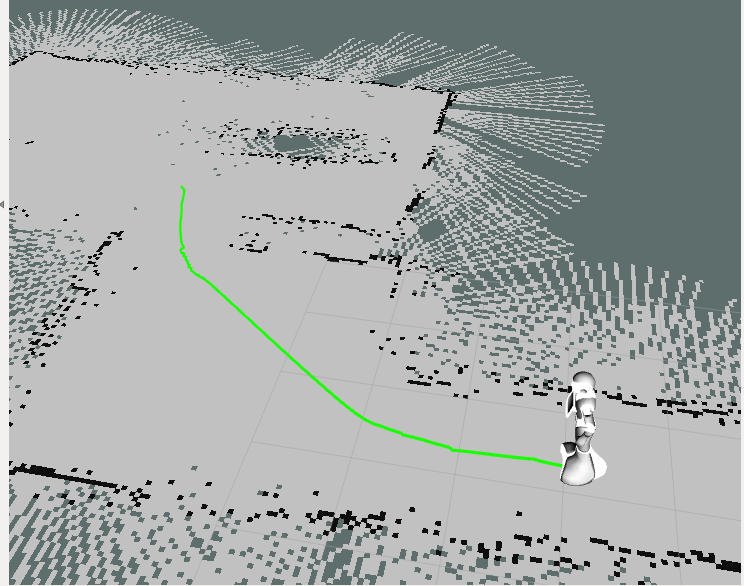

3. Navigation

Our approach to navigation is based on a Pepper-specific implementation using the ROS Navigation Stack. Mapping is first provided by the Gmapping ROS package, which generates a 2D occupancy map from laser sensor data using SLAM. Unfortunately, the data provided by Pepper's laser sensors is not accurate enough to build a detailed map. Consequently, in addition to those inputs, we feed the mapping node with data from Pepper's RGB-D camera. Once a valid map is obtained, it can be used for navigation: real-time localization is performed by the amcl ROS node. The next step is to compute a path to a given goal and follow it. This is handled by the move_base node, which runs a global and a local planner for the robot to follow.
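A navigation goal is a pose whose orientation is expressed as a quaternion. The sketch below shows how a planar goal (x, y, yaw) could be converted into that form. It uses a plain dictionary that mirrors the layout of a stamped pose message instead of importing ROS, and the function name and the "map" frame are assumptions for illustration.

```python
import math


def make_goal(x, y, yaw, frame_id="map"):
    """Build a pose-like dict for a planar goal, with yaw given in radians.

    A rotation of yaw about the vertical axis corresponds to the
    quaternion (0, 0, sin(yaw / 2), cos(yaw / 2)).
    """
    return {
        "header": {"frame_id": frame_id},
        "pose": {
            "position": {"x": x, "y": y, "z": 0.0},
            "orientation": {
                "x": 0.0,
                "y": 0.0,
                "z": math.sin(yaw / 2),
                "w": math.cos(yaw / 2),
            },
        },
    }


# Goal two meters ahead on the map, facing left (90 degrees).
goal = make_goal(2.0, 0.0, math.pi / 2)
```

On the robot, the same fields would be filled in on the goal message sent to move_base, which then plans and follows the path.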

4. Simulation

All our code works with the Gazebo and QiBullet simulators.

Gazebo Demonstration Video

2022

RoboCup@Home 2022: Qualification Application

Social Standard Platform League (SSPL)

Qualification Video

Team Description Paper

2021

RoboCup@Home 2021 Virtual Competition: 3rd

Social Standard Platform League (SSPL)

"The RoboCup@Home league aims to develop service and assistive robot technology with high relevance for future personal domestic applications. It is the largest international annual competition for autonomous service robots and is part of the RoboCup initiative."

RoboCup@Home league

2020

RoboCup@Home Education Online Challenge: 1st

Social Standard Platform League (SSPL)

RoboCup@Home Education is an educational initiative in RoboCup@Home that promotes educational efforts to boost RoboCup@Home participation and artificial intelligence (AI)-focused service robot development.

RoboCup@Home Education Online Challenge official website

Final Demonstration:

During the finals we competed against other international teams. We chose to show all of the Pepper robot's abilities in a single scenario, which introduces Pepper as a hotel bellboy interacting with customers.